Courtesy of today's Australian.

Monday, November 26, 2012

Sunday, November 25, 2012

Probability of forming Majority Government

As I build a robust aggregated poll, one question I am wrestling with is how to present the findings. If we start with the trend line from my naive aggregation of the two-party preferred (TPP) polls ...

I am looking at using a Monte Carlo technique to convert this TPP poll estimate to the probability of forming majority government. What this technique shows (below) is that the party with 51 per cent or more of the two-party preferred vote is almost guaranteed to form majority government.

There is also a realm where the two-party preferred vote is close to 50-50. In this realm, the likely outcome is a hung Parliament. When both lines fall below the 50 per cent threshold, this model is predicting a hung Parliament.
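A minimal sketch of how a Monte Carlo conversion from a TPP estimate to outcome probabilities might work. It rests on three assumptions. First, uncertainty in the national Coalition TPP is normal. Second, each seat's result is the national TPP plus a fixed seat lean plus normal noise with a standard deviation of about 3.27 points (the figure from the 12 November swing analysis). Third, two crossbench seats stay with independents. The pendulum is a made-up symmetric one, not real seat margins, and the function is illustrative rather than the model behind the charts. A hung Parliament results whenever neither side reaches 76 of 150 seats.

```python
import random

def majority_probabilities(tpp_mean, tpp_sd, seat_offsets, crossbench=2,
                           seat_sd=3.27, n_sims=10_000, seed=1):
    """Monte Carlo estimate of P(Coalition majority), P(ALP majority), P(hung).

    tpp_mean, tpp_sd: Coalition two-party-preferred estimate and its uncertainty (points)
    seat_offsets:     hypothetical Coalition lean of each contested seat relative
                      to the national TPP (points)
    crossbench:       seats assumed to stay with independents (not simulated)
    seat_sd:          spread of seat swings around the national swing (points)
    """
    rng = random.Random(seed)
    total_seats = len(seat_offsets) + crossbench
    majority = total_seats // 2 + 1  # 76 in a 150-seat House
    tally = {"coalition": 0, "alp": 0, "hung": 0}
    for _ in range(n_sims):
        national = rng.gauss(tpp_mean, tpp_sd)  # one plausible national result
        coalition = sum(
            1 for offset in seat_offsets
            if national + offset + rng.gauss(0.0, seat_sd) > 50.0
        )
        alp = len(seat_offsets) - coalition
        if coalition >= majority:
            tally["coalition"] += 1
        elif alp >= majority:
            tally["alp"] += 1
        else:
            tally["hung"] += 1
    return {outcome: count / n_sims for outcome, count in tally.items()}


# Hypothetical, perfectly symmetric pendulum of 148 contested seats.
PENDULUM = [-15 + 30 * i / 147 for i in range(148)]

for tpp in (49.0, 50.0, 51.0):
    print(tpp, majority_probabilities(tpp, 1.0, PENDULUM, n_sims=1_000))
```

Sweeping `tpp_mean` across a range of values and plotting the Coalition and ALP probabilities gives lines of the kind described above. The width of the hung band depends heavily on the assumed `tpp_sd` and `seat_sd`.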

Saturday, November 24, 2012

Australian 5 Poll Aggregation

I have spent the past week collecting polling data from the major polling houses. Nonetheless, I still have a ways to go in my project of building a Bayesian-intelligent aggregated poll model. Indeed, I have a ways to go on the simple task of data collection.

I can, however, plot some naive aggregations and talk a little of their limitations. These naive aggregations take all (actually most at this stage in their development) of the published polls from houses of Essential Media, Morgan, Newspoll and Nielsen. I place a localised 90-day regression over the data (the technique is called LOESS) and voila: a naive aggregation of the opinion polls. Let's have a look. We start with the aggregations of the primary vote share.

And on to the two-party-preferred aggregations.
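The LOESS smoothing described above can be sketched as a local linear regression with tricube weights. This is a simplified, fixed-bandwidth version. It assumes the "90-day" window means polls within 45 days either side of the target date. R's `loess()` instead sets its neighbourhood with a `span` fraction of the data, so results will differ. Like the naive aggregation, it ignores sample size and house.

```python
def tricube(u):
    """Tricube kernel: weight 1 at the centre, falling smoothly to 0 at |u| = 1."""
    u = abs(u)
    return (1 - u ** 3) ** 3 if u < 1 else 0.0


def loess_at(t, days, values, half_window=45.0):
    """Local linear regression of poll values on day number, evaluated at day t.

    Polls more than half_window days from t get zero weight, so the default
    gives a 90-day window. Otherwise every poll is treated equally (naive).
    """
    pts = [(d, y, tricube((d - t) / half_window)) for d, y in zip(days, values)]
    pts = [(d, y, w) for d, y, w in pts if w > 0]
    if not pts:
        raise ValueError("no polls inside the window")
    sw = sum(w for _, _, w in pts)
    mean_d = sum(w * d for d, _, w in pts) / sw
    mean_y = sum(w * y for _, y, w in pts) / sw
    sxx = sum(w * (d - mean_d) ** 2 for d, _, w in pts)
    sxy = sum(w * (d - mean_d) * (y - mean_y) for d, y, w in pts)
    slope = sxy / sxx if sxx > 0 else 0.0  # a single poll: fall back to its value
    return mean_y + slope * (t - mean_d)


# Hypothetical polls lying exactly on a straight line, so the local fit is exact.
days = list(range(0, 200, 5))
values = [50 + 0.02 * d for d in days]
print(round(loess_at(100, days, values), 6))  # 52.0
```

Evaluating `loess_at` on every day of the series traces out the smoothed trend line.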

So what are the problems with these naive aggregations? The first problem is that all of the individual poll reports are equally weighted in the final aggregation, regardless of the sample size of the poll. Nor does the aggregation give more weight to polls that are consistently closer to the population mean at a point in time than to those that exhibit higher volatility.
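One conventional way to address the sample-size problem is inverse-variance weighting. Each poll counts in proportion to 1/variance, and the variance comes from its sample size. This is a minimal sketch that assumes simple random sampling. Real polls are weighted and clustered, so the true error is larger than this formula suggests. It also does not address the second half of the problem, which is rewarding houses that track the mean more closely.

```python
import math

def pooled_estimate(polls):
    """Inverse-variance weighted average of poll shares.

    polls: iterable of (share_percent, sample_size).
    Returns (estimate_percent, standard_error_percent), assuming simple
    random sampling, so the standard error is optimistic for real polls.
    """
    num = den = 0.0
    for share, n in polls:
        p = share / 100.0
        variance = p * (1.0 - p) / n  # binomial sampling variance of a proportion
        weight = 1.0 / variance
        num += weight * p
        den += weight
    return 100.0 * num / den, 100.0 * math.sqrt(1.0 / den)


# Hypothetical polls: the larger sample pulls the estimate towards its result.
print(pooled_estimate([(52.0, 2000), (48.0, 1000)]))
```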

The second problem is that Essential Media is over-represented. Because its weekly reports aggregate two weeks' worth of polling, each week of polling effectively appears twice in the aggregation. In the main, the other polling houses do not do this (although Morgan does very occasionally). Essential Media also exhibits a number of artifacts that have me thinking about its sampling methodology and whether I need to make further adjustments in my final model. I should be clear here: these are not problems with the Essential Media reports; it is just a challenge when it comes to including Essential polls in a more robust aggregation.
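One way to stop overlapping Essential Media fieldwork from being counted twice is to keep only reports whose fieldwork windows do not overlap. The sketch below does this greedily, taking the earliest-finishing window first. The dates and values are invented. Halving the weight of each Essential report would be a reasonable alternative.

```python
from datetime import date, timedelta

def non_overlapping(reports):
    """Keep reports whose fieldwork windows do not overlap an already-kept one.

    reports: list of (fieldwork_start, fieldwork_end, tpp).
    Greedy earliest-end-first selection keeps the largest possible set.
    """
    kept = []
    last_end = None
    for start, end, tpp in sorted(reports, key=lambda r: r[1]):
        if last_end is None or start > last_end:
            kept.append((start, end, tpp))
            last_end = end
    return kept


# Hypothetical weekly releases, each pooling two weeks of fieldwork.
weekly = [
    (date(2012, 1, 2) + timedelta(weeks=k),
     date(2012, 1, 2) + timedelta(weeks=k, days=13),
     50.0 + k)  # placeholder TPP values
    for k in range(6)
]
print([tpp for _, _, tpp in non_overlapping(weekly)])  # [50.0, 52.0, 54.0]
```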

The third problem is that the naive aggregation assumes that individual "house effects" balance each other out. Each polling house has its own approach. These differences come down to hundreds of factors, including:

- whether the primary data is collected over the phone, in a face-to-face interview, or online,

- how the questions are asked including the order in which the questions are asked,

- the options given or not given to respondents,

- how the results are aggregated and weighted in the final report,

- and so on.
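A first-cut estimate of each house effect is that house's average deviation from the aggregate on the days it polled. The sketch below computes this and can optionally re-centre the effects so they sum to zero. That re-centring is exactly the naive "they balance out" assumption described above. The house names and numbers are placeholders. A Bayesian model would estimate these effects jointly with the trend rather than from a fixed aggregate.

```python
from collections import defaultdict

def house_effects(polls, centre=True):
    """Average deviation of each house from the aggregate on the days it polled.

    polls: iterable of (house, poll_tpp, aggregate_tpp_same_day), all in points.
    centre=True forces the effects to average zero: the naive assumption that
    house effects cancel out across the industry.
    """
    sums, counts = defaultdict(float), defaultdict(int)
    for house, value, aggregate in polls:
        sums[house] += value - aggregate
        counts[house] += 1
    effects = {house: sums[house] / counts[house] for house in sums}
    if centre:
        mean_effect = sum(effects.values()) / len(effects)
        effects = {house: e - mean_effect for house, e in effects.items()}
    return effects


# Placeholder houses and numbers, for illustration only.
polls = [("House A", 52.0, 50.0), ("House B", 51.0, 50.0)]
print(house_effects(polls, centre=False))  # {'House A': 2.0, 'House B': 1.0}
print(house_effects(polls))                # {'House A': 0.5, 'House B': -0.5}
```

If every house leans the same way, centring hides that shared lean. This is one reason the population bias discussed next cannot be recovered from the polls alone.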

The fourth problem is that population biases are not accounted for. In most contexts there is a social desirability bias with which the pollsters must contend. When I attended an agricultural high school in south west New South Wales there was a social desirability bias among my classmates towards the Coalition. When I subsequently went to university and worked in the welfare sector there was a social desirability bias among my colleagues and co-workers towards Labor. At the national level, there is likely to be an overall bias in one direction or the other. Even if only one or two in a hundred people feel some shame or embarrassment in admitting their real voting intention to the pollster, this can affect the final results. The nasty thing about population biases is that they affect all polling houses in the same direction.
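To put a number on "one or two in a hundred", suppose a fraction f of all respondents support party A but tell the pollster they support party B. If v_A and v_B are the true two-party-preferred shares, the measured shares and margin become:

$$
\hat{v}_A = v_A - f, \qquad \hat{v}_B = v_B + f, \qquad \hat{v}_A - \hat{v}_B = (v_A - v_B) - 2f
$$

With f = 0.01 to 0.02, party A's measured share is understated by 1 to 2 points and the margin by 2 to 4 points. Every house samples the same population, so averaging across houses does not remove this.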

If these problems can be calibrated, they can be corrected in a more robust polling model. This would allow for a far more accurate population estimate of voting intention. An analogy can be made with a watch that is running five minutes slow. If you know that your watch is running slow, and how slow it is, you can mentally make the adjustment to arrive at meetings on time. But if you mistakenly believe your watch is on time, you will regularly miss the start of meetings. With a more accurate estimate of voting intention, it is possible to better identify the probability of a Labor or Coalition win (or a hung Parliament) following the 2013 election.

Fortunately, there is a range of classical and Bayesian techniques that can be applied to each of the above problems. And that is what I will be working on for the next few weeks.

Caveats: please note, the above charts should be taken as an incomplete progress report. I am still cleaning the data I have collected (and I have more to collect). And these charts suffer from all of the problems I have outlined above, and then some more. Just because the aggregation says the Coalition two-party-preferred vote is currently sitting at 51.6 per cent, there is no guarantee that is the case.

And a thank you: I would like to thank George from Poliquant, who shared his data with me. It helped me enormously in getting this far.

Update: As I refine my underlying production of these graphs and find errors or improvements, I am updating this page.

Monday, November 19, 2012

Nielsen

Today's Nielsen (probably the last Nielsen poll for 2012) was pretty much business as usual. In terms of how those polled said they would cast their preferences, the result was 48-52 against the government.

When the pollster applied preferences in accord with how they flowed at the last election the result was 47-53.
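Applying last-election preference flows amounts to splitting each minor party's primary vote between the two majors in fixed proportions. Below is a sketch with made-up primaries and flows (not Nielsen's figures or the actual 2010 flows).

```python
def tpp_from_primaries(primaries, flows_to_alp):
    """Two-party-preferred estimate from primary votes and assumed preference flows.

    primaries:    party -> primary vote (%), including "ALP" and "Coalition"
    flows_to_alp: minor party -> share of its preferences flowing to the ALP
                  (for example, the flows observed at the last election)
    """
    alp = primaries["ALP"]
    coalition = primaries["Coalition"]
    for party, share in primaries.items():
        if party in ("ALP", "Coalition"):
            continue
        flow = flows_to_alp[party]
        alp += share * flow
        coalition += share * (1.0 - flow)
    total = alp + coalition  # rescale in case the primaries do not sum to 100
    return 100.0 * alp / total, 100.0 * coalition / total


# Hypothetical primaries and flows, for illustration only.
primaries = {"ALP": 34.0, "Coalition": 46.0, "Greens": 11.0, "Others": 9.0}
flows = {"Greens": 0.80, "Others": 0.45}
alp, coalition = tpp_from_primaries(primaries, flows)
print(round(alp, 2), round(coalition, 2))  # 46.85 53.15
```

The respondent-allocated figure instead uses each respondent's own stated preference. The two methods can therefore differ by a point or so, as they did in this poll.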

The primary vote story is as follows.

Sunday, November 18, 2012

Cube Law

I have spent the past few days playing with Bayesian statistics, courtesy of JAGS (Just Another Gibbs Sampler), a Markov chain Monte Carlo (MCMC) engine.

The problem I have been wrestling with is what the British call the Cube Law. In first-past-the-post voting systems with a two-party outcome, the Cube Law asserts that the ratio of seats a party wins at an election is approximately the cube of the ratio of votes the party won in that election. We can express this algebraically as follows (where s is the proportion of seats won by a party and v is the proportion of votes won by the party). Both s and v lie in the range from 0 to 1.
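In symbols, the Cube Law is usually stated in terms of odds:

$$
\frac{s}{1-s} = \left( \frac{v}{1-v} \right)^{3}
$$

For example, a vote share of v = 0.55 gives vote odds of about 1.22, seat odds of about 1.83, and hence s of about 0.65.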

My question was whether the relationship held up under Australia's two-party-preferred voting system. For the record, I came across this formula in Simon Jackman's rather challenging text: Bayesian Analysis for the Social Sciences.

My first challenge was to make the formula tractable for analysis. I could not work out how Jackman did his analysis (in part because I could not work out how to generate an inverse gamma distribution from within JAGS, and it did not dawn on me initially to just use normal distributions). So I decided to pick at the edges of the problem and see if there was another way to get to grips with it. There are a few ways of algebraically rearranging the Cube Law identity. In the first of the following equations, I have made the power term (relabeled k) the subject of the equation. In the second, I made the proportion of seats won the subject of the equation.
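With a general exponent k in place of 3, the two rearrangements are:

$$
k = \frac{\ln\left( \frac{s}{1-s} \right)}{\ln\left( \frac{v}{1-v} \right)}
\qquad\qquad
s = \frac{v^{k}}{v^{k} + (1-v)^{k}}
$$

The first is undefined at v = 0.5, where the log-odds of the vote share is zero. The second is the expression that appears as `theta[i]` in the JAGS model.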

In the end, I decided to run with the second equation, largely because I thought it could be modeled simply with the beta distribution, which generates values in the range 0 to 1. The next challenge was to construct a link function from the second equation to the beta distribution. I am not sure whether my JAGS solution is efficient or correct, but here goes (constructive criticism welcomed).

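```
model {
    # likelihood: ALP seat share s[i] at election i
    for (i in 1:length(s)) {
        s[i] ~ dbeta(alpha[i], beta[i])   # s is a proportion between 0 and 1

        # mean/precision parameterisation: E[s[i]] = theta[i], phi controls the spread
        alpha[i] <- theta[i] * phi
        beta[i] <- (1 - theta[i]) * phi

        # generalised Cube Law: expected seat share given vote share v[i]
        theta[i] <- v[i]^k / (v[i]^k + (1 - v[i])^k)
    }

    # prior distributions
    phi ~ dgamma(0.01, 0.01)              # vague prior on the beta precision
    k ~ dnorm(0, 1 / (sigma^2))           # vaguely informative prior (dnorm takes a precision)
    sigma ~ dnorm(0, 1 / 10000) T(0,)     # uninformative prior, truncated to be positive
}
```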
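For readers without JAGS, the same likelihood can be checked in plain Python. Under the link above, Beta(theta·phi, (1 - theta)·phi) has mean theta and variance theta(1 - theta)/(phi + 1). This sketch is a maximum-likelihood grid search, not the MCMC analysis in the post. It holds phi fixed at an assumed value and uses synthetic draws from the model (hypothetical vote shares, not the Wikipedia election results). The function names are illustrative. If the link is set up correctly, the search should recover a k close to the one used for the simulation.

```python
import math
import random

def cube_law_theta(v, k):
    """Expected seat share for vote share v under the generalised Cube Law."""
    return v ** k / (v ** k + (1 - v) ** k)

def beta_logpdf(x, a, b):
    """Log density of a Beta(a, b) distribution at x (0 < x < 1)."""
    return (math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
            + (a - 1) * math.log(x) + (b - 1) * math.log(1 - x))

def log_likelihood(k, votes, seats, phi):
    """Same likelihood as the JAGS model: s ~ Beta(theta*phi, (1-theta)*phi)."""
    total = 0.0
    for v, s in zip(votes, seats):
        theta = cube_law_theta(v, k)
        total += beta_logpdf(s, theta * phi, (1 - theta) * phi)
    return total

def fit_k(votes, seats, phi):
    """Grid-search maximum-likelihood estimate of k on 1.00, 1.01, ..., 5.00."""
    grid = [1 + i / 100 for i in range(401)]
    return max(grid, key=lambda k: log_likelihood(k, votes, seats, phi))

def simulate_seats(votes, k, phi, seed=0):
    """Synthetic seat shares drawn from the model (hypothetical, not real elections)."""
    rng = random.Random(seed)
    seats = []
    for v in votes:
        theta = cube_law_theta(v, k)
        seats.append(rng.betavariate(theta * phi, (1 - theta) * phi))
    return seats


votes = [0.42 + 0.16 * i / 39 for i in range(40)]  # hypothetical ALP TPP shares
seats = simulate_seats(votes, k=3.0, phi=200.0, seed=1)
print(fit_k(votes, seats, phi=200.0))  # should land near 3
```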

The results were interesting. I used the Wikipedia data for Federal elections since 1937. And I framed the analysis from the ALP perspective (ALP TPP vote share and the ALP proportion of seats won).

The mean result for k was 2.94. The posterior distribution for k had a 95% credible interval between 2.282 and 3.606. The median of the posterior distribution was 2.939 (pretty well the same as the mean; both were very close to the magical 3 of the Cube Law). It would appear that the Federal Parliament, in terms of the ALP share of the TPP vote and seats won, operates pretty close to the Cube Law. The distribution of k, over 4 chains each with 50,000 MCMC iterations, was:

The files I used in this analysis can be found here.
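The summary statistics quoted above (mean, median and 95% credible interval) can be computed directly from the pooled chains. This minimal sketch assumes the draws have already been exported from JAGS as plain lists. It also assumes an equal-tailed interval, since the post does not say whether an equal-tailed or highest-density interval was used.

```python
import statistics

def percentile(sorted_draws, q):
    """Linear-interpolation percentile (0 <= q <= 1) of pre-sorted draws."""
    pos = q * (len(sorted_draws) - 1)
    lower = int(pos)
    upper = min(lower + 1, len(sorted_draws) - 1)
    frac = pos - lower
    return sorted_draws[lower] + frac * (sorted_draws[upper] - sorted_draws[lower])

def summarise(chains, level=0.95):
    """Pool MCMC chains; return mean, median and an equal-tailed credible interval."""
    draws = sorted(x for chain in chains for x in chain)
    tail = (1 - level) / 2
    return {
        "mean": statistics.mean(draws),
        "median": statistics.median(draws),
        "interval": (percentile(draws, tail), percentile(draws, 1 - tail)),
    }


chains = [list(range(0, 501)), list(range(501, 1001))]  # stand-in draws, not real output
print(summarise(chains))
```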

Technical follow-up: Simon Jackman approaches the Cube Law with what looks like a classical linear regression of logits (logs of odds). The core of this regression equation is as follows:
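Assuming the regression takes the usual log-odds on log-odds form, it can be written as:

$$
\ln\left( \frac{s_i}{1-s_i} \right) = \beta_0 + \beta_1 \ln\left( \frac{v_i}{1-v_i} \right) + \varepsilon_i
$$

If β0 = 0 and the error term is dropped, exponentiating both sides gives s/(1 - s) = (v/(1 - v))^β1. This is why β1 plays the role of k. A non-zero β0 shifts the seat share at an even vote: at v = 0.5, s = e^β0 / (1 + e^β0). Taking Jackman's reported mean of β0 = -0.11 at face value gives s of about 0.473, or roughly 47 per cent of seats from 50 per cent of the vote.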

By way of comparison, the k in my equation is algebraically analogous to the β1 in Jackman's equation. Our results are close: I found a mean of 2.94, Jackman a mean of 3.04. In my equation, I implicitly treat β0 as zero; Jackman found a mean of -0.11, and he uses β0 to assess bias in the electoral system. Nonetheless, the density kernel I found for k (below) looks very similar to the kernel Jackman found for his β1 on page 149 of his text. [This last result may surprise a little as my data spanned the period 1937 to 2010, while Jackman's data spanned a shorter period: 1949 to 2004].

I suspect the pedagogic point of this example in Jackman's text was the demonstration of a particular "improper" prior density and the use of its conjugate posterior density. I could probably have used Jackman's approach with normal priors and posteriors. For me it was a useful learning experience, looking at other approaches because I did not know how to get an inverse gamma distribution working in JAGS. Nonetheless, if you know how to do the inverse gamma, please let me know.
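On the inverse gamma question, a standard result is that if τ ~ Gamma(a, rate b), then 1/τ ~ InvGamma(a, b). In JAGS this is usually exploited by putting the gamma prior on the precision, for example `tau ~ dgamma(a, b)`, which `dnorm` takes directly. A variance, if needed, can then be computed with `sigma2 <- 1 / tau`. The Python sketch below only checks the reciprocal trick numerically. The shape and scale values are arbitrary.

```python
import random
import statistics

def inverse_gamma_draws(shape, scale, n, seed=0):
    """Draws from InvGamma(shape, scale) as reciprocals of Gamma(shape, rate=scale) draws."""
    rng = random.Random(seed)
    # random.gammavariate takes (shape, scale); a rate of `scale` is a scale of 1/scale
    return [1.0 / rng.gammavariate(shape, 1.0 / scale) for _ in range(n)]


draws = inverse_gamma_draws(shape=5.0, scale=8.0, n=100_000)
print(statistics.mean(draws))  # should be close to the theoretical mean scale / (shape - 1) = 2.0
```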

Tuesday, November 13, 2012

State swings

Yesterday we looked at the distribution of individual seat swings around the national swing. The short answer is that these swings appear to be normally distributed.

Today, I want to look at the distribution of individual seat swings around the swing for the state in which the seat is located. In this process, I have ignored the territories because there are too few seats to analyse. The overall analysis (below) is not as neat as the national picture (above).

Compared to the normal distribution, this distribution is a touch leptokurtic (more sharply peaked, with relatively heavier tails, than the normal curve). Nonetheless, it is probably close enough to the normal curve to use the normal curve in election simulations.
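A quick numerical check of "a touch leptokurtic" is the sample excess kurtosis. It is about 0 for normal data and positive for more peaked, heavier-tailed data. This minimal sketch uses the plain moment estimator, which is biased in small samples.

```python
def excess_kurtosis(xs):
    """Population excess kurtosis: 0 for a normal curve, > 0 for leptokurtic data."""
    n = len(xs)
    mean = sum(xs) / n
    m2 = sum((x - mean) ** 2 for x in xs) / n  # second central moment
    m4 = sum((x - mean) ** 4 for x in xs) / n  # fourth central moment
    return m4 / m2 ** 2 - 3.0


print(excess_kurtosis([-1.0, 1.0, -1.0, 1.0]))   # -2.0: flatter than normal
print(excess_kurtosis([0.0] * 8 + [-3.0, 3.0]))  # about 2.0: leptokurtic
```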

The good news is the reduction in the standard deviation that can be achieved if I am able to estimate the state-by-state swings in real time. That is my next challenge. I am looking at a range of related strategies to achieve this, including: Hidden Markov and State Space Models, dynamic linear models and Kalman filters.
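The reduction in spread can be measured by computing the seat-swing residuals twice: once around the national swing and once around each seat's state swing. This sketch uses unweighted means of the seat swings as stand-ins for the official national and state TPP swings. The example data are made up.

```python
import statistics
from collections import defaultdict

def swing_spreads(seats):
    """Return (sd_about_national, sd_about_state) for seat swings.

    seats: iterable of (state, seat_swing) in percentage points. The 'national'
    and 'state' swings are unweighted means of the seat swings (a simplification).
    """
    seats = list(seats)
    national = statistics.mean([swing for _, swing in seats])
    by_state = defaultdict(list)
    for state, swing in seats:
        by_state[state].append(swing)
    state_mean = {state: statistics.mean(swings) for state, swings in by_state.items()}
    about_national = [swing - national for _, swing in seats]
    about_state = [swing - state_mean[state] for state, swing in seats]
    return statistics.pstdev(about_national), statistics.pstdev(about_state)


# Made-up swings: two states moving quite differently.
data = [("NSW", 1.0), ("NSW", 2.0), ("NSW", 3.0), ("QLD", 7.0), ("QLD", 8.0), ("QLD", 9.0)]
print(swing_spreads(data))  # about (3.11, 0.82)
```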

For completeness, the next set of charts shows the result for each state. In each, the smaller standard deviation is evident.

Newspoll 51-49 in the Coalition's favour

The Australian has released the latest Newspoll survey of voting intentions. The headline result is in line with the changing fortunes since the Coalition's peak at the end of April 2012. The Poll Bludger also has the details.

What would this mean at the next election? If the national vote was 51-49, the 100,000-election simulation suggests the most likely outcome would be a Coalition win.

The primary voting intentions were as follows. Of note is the decline in the Greens' fortunes.

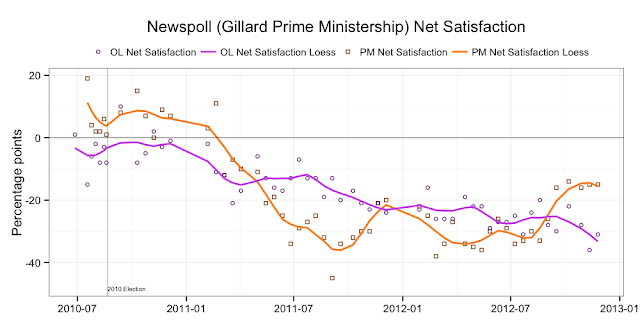

Moving to the attitudinal questions. Ms Gillard is reasserting her position as the preferred Prime Minister. Her net satisfaction rating is also improving.

And, to put a couple of those charts in some historical perspective ...

Monday, November 12, 2012

How are election swings distributed?

Taking the seat-by-seat swings for each Federal election since 1993, I was interested to see how they were distributed around the national swing in two-party-preferred voting outcomes compared with the previous election. I was conscious that a lot of financial data is not normally distributed (fat tails are common in financial distributions). I wanted to check my use of a normal probability function in the simulation of election results.

First, let's look at the distribution of swings for each of the federal elections. The y-axis in the first graph (and the x-axis in the subsequent graphs) is measured in percentage points.

Of note in the first plot, it is not unusual in an election to have one or two seats that buck the national swing by around plus or minus ten percentage points. In 1996, the outliers were swings of +17 and -14 percentage points compared to the national average.

These distributions can be compared with the normal curve (dashed in the next plot).

Combining the seven elections, we can see in the next plot that the overall distribution of seat swings is normally distributed around the national swing. In that plot, a normal curve (red dashed line) is superimposed over the probability density function for seat-by-seat swings relative to the national swing across the seven elections. Both curves have a standard deviation of 3.27459.

This means I can use a normal distribution in Monte Carlo simulations of the 2013 election result.
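One simple check on the fat-tails concern raised above is to compare the share of seats whose swing residual lies beyond, say, two standard deviations with what a normal curve predicts (about 4.6 per cent). A minimal sketch follows. Here `residuals` would be the seat swings minus the national swing, pooled across elections. The example data are invented.

```python
import math
import statistics

def tail_check(residuals, z=2.0):
    """Compare the share of residuals beyond z standard deviations with a normal curve."""
    mean = statistics.mean(residuals)
    sd = statistics.pstdev(residuals)
    observed = sum(abs(r - mean) > z * sd for r in residuals) / len(residuals)
    expected = math.erfc(z / math.sqrt(2))  # P(|Z| > z) for a standard normal
    return observed, expected


# Invented residuals: mostly zero with two large outliers.
print(tail_check([0.0] * 98 + [-10.0, 10.0]))  # (0.02, about 0.0455)
```

Repeating the check at z = 3 is a stricter test, because fat tails show up most clearly far from the centre.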

The data for this analysis came from the Australian Electoral Commission website.

Saturday, November 10, 2012

2013 Federal Election Simulation

Following his success at predicting the US Presidential election for 2012, I decided to read Nate Silver's book: The Signal and the Noise. I am enjoying it so far.

It inspired me to throw together a quick and dirty Monte Carlo simulation of the Australian 2013 Federal Election based on the current state of national opinion polling (assuming the Coalition would win 52.5 per cent of the two-party-preferred vote). In effect, the simulation runs the election 100,000 times, collating the range of potential outcomes given the current polling.
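Below is a minimal sketch of the kind of simulation described. It assumes a uniform national swing is applied to each seat's previous Coalition TPP, plus normally distributed seat-level noise (standard deviation of about 3.27 points, as in the 12 November analysis). This is not the author's R code. The pendulum is a made-up symmetric one and the function name is illustrative. Seats assumed to stay with Wilkie and Katter would simply be left out of the list.

```python
import random
from collections import Counter

def simulate_coalition_seats(previous_tpp_by_seat, previous_national, polled_national,
                             seat_sd=3.27, n_sims=100_000, seed=2013):
    """Counter of Coalition seat totals across simulated elections.

    previous_tpp_by_seat: Coalition TPP (%) in each contested seat at the last election
    previous_national:    Coalition national TPP (%) at the last election
    polled_national:      current polled Coalition TPP (%), e.g. 52.5
    Seats assumed to stay with independents should be left out of the list.
    """
    rng = random.Random(seed)
    national_swing = polled_national - previous_national  # uniform swing to apply
    tally = Counter()
    for _ in range(n_sims):
        wins = sum(
            1 for tpp in previous_tpp_by_seat
            if tpp + national_swing + rng.gauss(0.0, seat_sd) > 50.0  # seat-level noise
        )
        tally[wins] += 1
    return tally


# Made-up symmetric pendulum of 148 contested seats (not Antony Green's figures).
pendulum = [35 + 30 * i / 147 for i in range(148)]
counts = simulate_coalition_seats(pendulum, 50.0, 52.5, n_sims=2_000)
print(counts.most_common(3))
```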

The result is a probability distribution of likely seats won for each party. The most likely outcome is 63 seats for the ALP and 85 seats for the Coalition. There is a more than 50 per cent chance of the ALP winning between 62 and 65 seats, and of the Coalition winning between 83 and 86 seats.
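Given a tally of simulated seat counts, the most likely outcome and a range holding more than half of the simulations can be read off as shown below. This sketch picks the shortest run of consecutive seat totals that covers at least 50 per cent of the runs. The post does not say exactly how its range was chosen, and the counts in the example are invented.

```python
from collections import Counter

def most_likely_and_range(seat_counts, coverage=0.5):
    """Mode and shortest run of consecutive seat totals covering `coverage` of runs.

    seat_counts: Counter mapping seats won -> number of simulations with that total.
    """
    total = sum(seat_counts.values())
    mode = max(seat_counts, key=lambda s: (seat_counts[s], -s))  # ties go to fewer seats
    lowest, highest = min(seat_counts), max(seat_counts)
    best = (lowest, highest)
    for lo in range(lowest, highest + 1):
        covered = 0
        for hi in range(lo, highest + 1):
            covered += seat_counts[hi]  # a Counter returns 0 for missing totals
            if covered >= coverage * total:
                if hi - lo < best[1] - best[0]:
                    best = (lo, hi)
                break
    return mode, best


# Invented tallies from 100 hypothetical runs.
counts = Counter({60: 5, 62: 20, 63: 30, 64: 25, 65: 10, 70: 10})
print(most_likely_and_range(counts))  # (63, (62, 63))
```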

I am assuming that Wilkie would retain Denison and that Katter would retain Kennedy. For the other seats currently held by the Greens and independents, I am assuming they would return to the major parties. I am following Poliquant's logic on this. I used Antony Green's pendulum for some of the underlying arithmetic.

This is a very naive model! Over the coming months I plan to refine it. Refinements would include better treatment of the non-major-party seats, adding state-by-state opinion polling results, accounting for systemic bias from the various pollsters (what Simon Jackman calls "house effects"), and a sprinkling of Bayesian intelligence. As we get closer to the election I will look at ballot position and whether the seat contest has a retiring member or not.

In addition to the above national totals, the model could also provide seat-by-seat probabilities. I have not written code for this as yet, but it should not take too long.
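With normally distributed seat swings, a per-seat win probability can be tallied from the simulation runs. If the national result is held fixed, it also has a closed form via the normal cumulative distribution function. The sketch below compares the two. The seat leans, national TPP and the 3.27-point spread are illustrative assumptions, and the function names are hypothetical.

```python
import math
import random

def seat_probabilities(offsets, national_tpp, seat_sd=3.27, n_sims=20_000, seed=7):
    """Share of simulated elections in which the Coalition wins each seat.

    offsets: hypothetical Coalition lean of each seat relative to the national TPP (points).
    """
    rng = random.Random(seed)
    wins = [0] * len(offsets)
    for _ in range(n_sims):
        for i, offset in enumerate(offsets):
            if national_tpp + offset + rng.gauss(0.0, seat_sd) > 50.0:
                wins[i] += 1
    return [w / n_sims for w in wins]

def seat_probability_exact(offset, national_tpp, seat_sd=3.27):
    """Closed form for a fixed national result: the normal CDF of the seat's margin."""
    z = (national_tpp + offset - 50.0) / seat_sd
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))


leans = [-3.0, 0.0, 3.0]
print(seat_probabilities(leans, 52.5))
print([seat_probability_exact(o, 52.5) for o in leans])
```

Once the national TPP is itself uncertain, the closed form no longer applies directly, and tallying across runs is the simpler route.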

Update

Well, I have now written some code to aggregate the individual seat results from each simulation run. I have made some minor modifications to the way in which the second model manages the seats held by others. I have also tidied the plots a little. Nonetheless, it is still a very naive model. I have a long way to go before I am comfortable that it is making robust predictions on the available data.

The updated charts for the new 100,000-election simulation follow:

The R code for these models can be found here. As always, if you see any errors in the code, or ways I can improve the analysis, please drop me a line.
